Google Webmaster Tools is an amazing tool, and if you are a serious blogger you shouldn't ignore it. From an SEO perspective, it's a lifesaver because it gives you detailed information about how your site performs in Google search.

Webmaster Tools offers many features that let you analyze your blog thoroughly, so you can act on what you find and improve your blog's ranking in search results.

Remember that search engine optimization is a gradual process and doesn't happen overnight. Sometimes it takes weeks or even months to reach a good search engine ranking.

I will not be talking about backlinks and other ranking strategies. Instead, in this post I will share how you can use the best free SEO tool, Google Webmaster Tools, to optimize your blog for search engines.

How to Make Your Blog Search-Friendly Using Search Console (formerly Google Webmaster Tools):

This is the first thing you need to do: generate a sitemap of your blog and submit it to Google Webmaster Tools. A sitemap helps Google crawl your site effectively, and it lets you track how many of your URLs Google has indexed. If you have not submitted your sitemap yet, go ahead and submit it now.

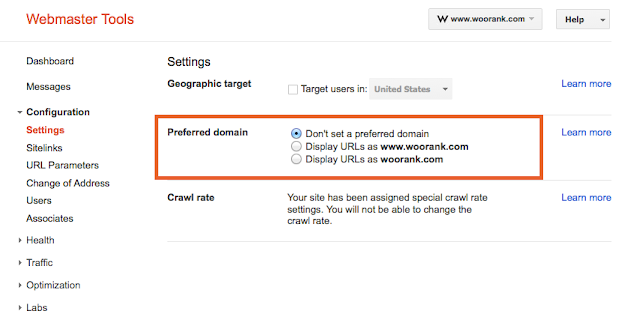
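Your blogging platform or a plugin can usually generate the sitemap for you, but it helps to know what the file contains. Below is a minimal Python sketch that builds a sitemap in the sitemaps.org XML format from a list of page URLs and last-modified dates. The function name `build_sitemap` and the example.com URLs are hypothetical placeholders; the sketch writes only the required `loc` and the optional `lastmod` tags and skips other optional tags such as `changefreq` and `priority`.

```python
"""Minimal sitemap.xml generator in the sitemaps.org format (a sketch, not a full implementation)."""
import xml.etree.ElementTree as ET
from datetime import date

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Return sitemap XML for a list of (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in text, as the protocol requires.
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages; replace with your blog's real URLs.
    pages = [
        ("https://example.com/", date(2024, 1, 15)),
        ("https://example.com/about/", date(2024, 1, 10)),
    ]
    print(build_sitemap(pages))
```

You would save the output as `sitemap.xml` at the root of your site and then submit its URL in Webmaster Tools.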

Webmaster Tools settings let you configure some important things, such as geographic targeting. If your blog or service is limited to one particular region, set the target to that region. For example, one of my clients has a WordPress blog for UK web development services, so I set her blog's geographic target to the UK. The same applies to your blog or service. If your blog targets a global audience, leave this setting as it is. WordPress takes care of the www vs. non-www preference, though I suggest configuring this setting in Google Webmaster Tools as well. The right crawl rate depends on how often you update your blog. I always leave it to Google, though if you update your blog many times a day or only once a week, you might want to experiment with this setting.

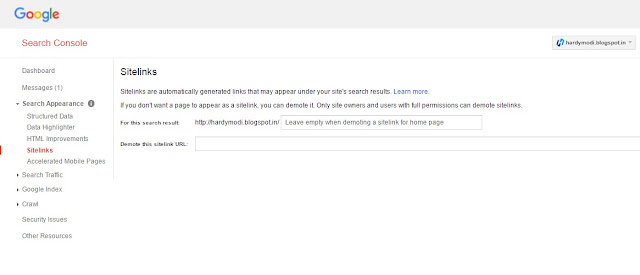

Sitelinks are additional links to pages on your site that Google shows beneath your main search result when it finds them useful. In my experience, the pages you link to most often tend to become your sitelinks. Sometimes Google picks less useful pages, such as a disclaimer or privacy policy, as sitelinks. In that case, you can use this feature of Webmaster Tools to manage your sitelinks. You can't choose which links are included in your sitelinks from here, but you can always demote unwanted links.

Ranking for the right keywords matters more than ranking for any keywords at all. If your blog is about shoes and you rank on the first page for keywords like shirts and trousers, that traffic is useless. The keywords report in Google Webmaster Tools shows which keywords Google found while crawling your site. If you are ranking for the wrong keywords, find the cause and adjust your content so that search engines see the relevant, useful keywords.
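To make the shoes example concrete, here is a small Python sketch of how you might flag off-topic keywords once you have copied them out of the report into a list. The function name `off_topic_queries`, the topic terms, and the sample queries are all hypothetical, and the whole-word matching is a deliberate simplification: a query containing "shoe" would not match the term "shoes".

```python
"""Flag keywords that share no words with a blog's topic vocabulary (a simplified sketch)."""


def off_topic_queries(queries: list[str], topic_terms: set[str]) -> list[str]:
    """Return the queries that contain none of the topic terms (case-insensitive, whole words)."""
    terms = {t.lower() for t in topic_terms}
    flagged = []
    for query in queries:
        words = set(query.lower().split())
        # A query with no word in common with the topic terms is likely off-topic.
        if words.isdisjoint(terms):
            flagged.append(query)
    return flagged


if __name__ == "__main__":
    # Hypothetical keywords for a blog about shoes.
    queries = ["running shoes review", "cheap shirts", "leather shoes care", "trousers sale"]
    print(off_topic_queries(queries, {"shoes", "sneakers", "boots"}))
    # prints ['cheap shirts', 'trousers sale']
```

The flagged keywords are the ones worth investigating: look at which of your pages they lead to and why Google associates those pages with them.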

There are many other equally important tools, such as site speed, internal links, and crawl errors. I suggest spending some time in Google Webmaster Tools today and coming back with your questions. Let's start a good discussion about SEO using Google Webmaster Tools.

Also, let us know which feature of Google Search Console you find most useful.